Introduction

Because cloud-native applications are dynamic, managing storage on Kubernetes can be challenging. Workloads can move between nodes and scale up or down at any moment, which makes it harder still. To address this, Kubernetes introduced managed storage through Persistent Volumes (PV) and Persistent Volume Claims (PVC). Later came the Container Storage Interface (CSI), which helps automate the process. A CSI driver is responsible for provisioning, mounting, unmounting, and removing volumes, and can also snapshot them.

In this blog, we will walk through creating a ReadWriteMany (RWX) Persistent Volume on Civo.

Let’s get started

Note: This tutorial uses and supports Helm 3.0 and above.

Step 1 — Deploying the NFS Server with Helm

To deploy the NFS server, you will use a Helm chart. Deploying a Helm chart is an automated solution that is faster and less error-prone than creating the NFS server deployment by hand.

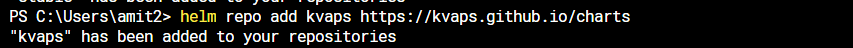

First, make the kvaps chart repository available to your Helm client by adding the repo:

$ helm repo add kvaps https://kvaps.github.io/charts

Next, pull the metadata for the repository you just added. This will ensure that the Helm client is updated:

$ helm repo update

To verify access to the kvaps repo, perform a search on its charts:
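The search command itself does not appear in the text above. A minimal sketch of one way to run the check, assuming Helm 3's `helm search repo` subcommand and the `kvaps` repository alias added earlier; the `chart_available` helper name is hypothetical:

```shell
# Hypothetical helper: succeeds when `helm search repo` lists the given chart.
# Assumes Helm 3, where the first column of the results table is the chart NAME.
chart_available() {
  helm search repo "$1" | grep -q "^$1[[:space:]]"
}

# Usage, once the kvaps repo has been added and updated:
#   chart_available kvaps/nfs-server-provisioner && echo "chart found"
```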

This result means that your Helm client is running and up-to-date.

Now that you have Helm set up, install the nfs-server-provisioner Helm chart to set up the NFS server. If you would like to examine the contents of the chart, take a look at its documentation on GitHub.

When you deploy the Helm chart, you are going to set a few variables for your NFS server to further specify the configuration for your application. You can also investigate other configuration options and tweak them to fit the application’s needs.

To install the Helm chart, use the following command:

$ helm install nfs-server kvaps/nfs-server-provisioner --version 1.3.0 --set persistence.enabled=true,persistence.size=10Gi

This command provisions an NFS server with the following configuration options:

- Adds a persistent volume for the NFS server with the --set flag. This ensures that all NFS shared data persists across pod restarts.
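The same settings can also be kept in a values file rather than passed inline, which is easier to review and reuse. A minimal sketch, assuming the chart reads `persistence.enabled` and `persistence.size` as nested keys (which is what the `--set` syntax above implies); the file name `nfs-values.yaml` is hypothetical:

```shell
# Write the two --set values as nested YAML keys (the file name is an assumption).
cat > nfs-values.yaml <<'EOF'
persistence:
  enabled: true
  size: 10Gi
EOF

# Then install with -f instead of --set:
#   helm install nfs-server kvaps/nfs-server-provisioner --version 1.3.0 -f nfs-values.yaml
```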

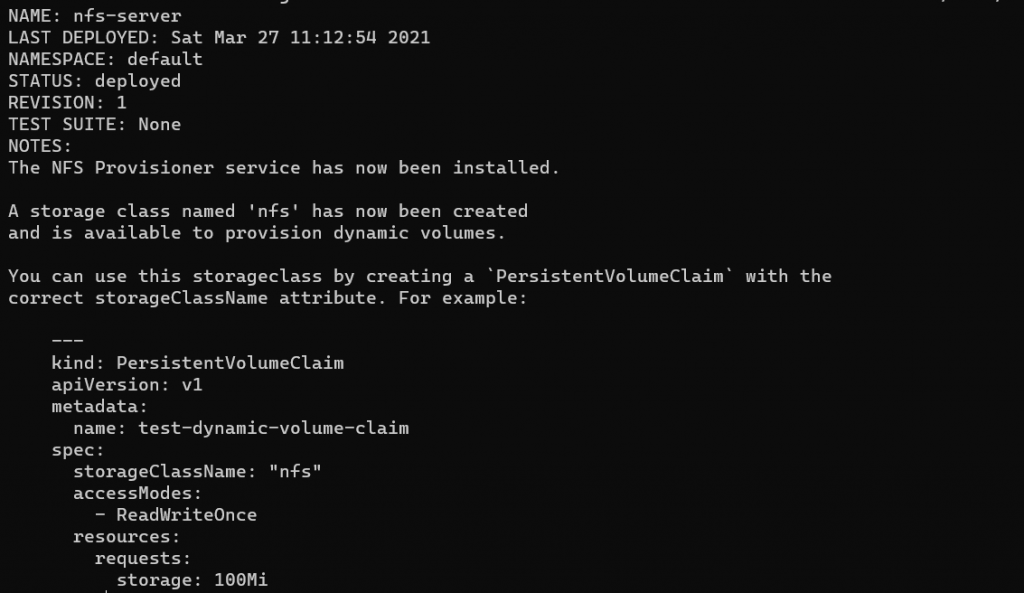

After this command completes, you will get output similar to the following:

To see the NFS server you provisioned, run the following command:

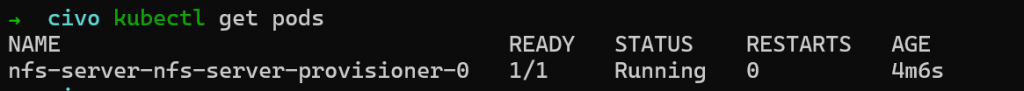

$ kubectl get pods

This will show the following:

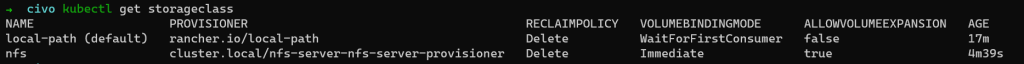

Next, check for the storageclass you created:

$ kubectl get storageclass

This will give output similar to the following:

You now have an NFS server running, as well as a storageclass that you can use for dynamic provisioning of volumes. Next, you can create a deployment that will use this storage and share it across multiple instances.
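Before moving on, it can help to confirm that the class the claim will reference actually exists. A small sketch, assuming the chart created a StorageClass named `nfs` (the name the claim in Step 2 refers to); `storageclass_exists` is a hypothetical helper:

```shell
# Hypothetical helper: succeeds if a StorageClass with the given name exists.
# kubectl exits non-zero when the named resource is not found.
storageclass_exists() {
  kubectl get storageclass "$1" >/dev/null 2>&1
}

# Usage:
#   storageclass_exists nfs || echo "StorageClass nfs not found" >&2
```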

Step 2 — Deploying an Application Using a Shared PersistentVolumeClaim

In this step, you will create an example deployment on your Civo Kubernetes cluster in order to test your storage setup. This will be an Nginx web server app named web.

To deploy this application, first write the YAML file to specify the deployment. Open up an nginx-test.yaml file with your text editor; this tutorial will use nano:

$ nano nginx-test.yaml

In this file, add the following lines to define the deployment with a PersistentVolumeClaim named nfs-data:

nginx-test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - image: nginx:latest
        name: nginx
        resources: {}
        volumeMounts:
        - mountPath: /data
          name: data
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: nfs-data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-data
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
  storageClassName: nfs

Save the file and exit the text editor.

This deployment is configured to use the accompanying PersistentVolumeClaim nfs-data and mount it at /data.

In the PVC definition, you will find that the storageClassName is set to nfs. This tells the cluster to satisfy this storage using the rules of the nfs storageClass you created in the previous step. The new PersistentVolumeClaim will be processed, and then an NFS share will be provisioned to satisfy the claim in the form of a Persistent Volume. The pod will attempt to mount that PVC once it has been provisioned. Once it has finished mounting, you will verify the ReadWriteMany (RWX) functionality.
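Once the manifest has been applied, the binding described here can be checked from the command line, since a pod stuck in Pending usually points at an unbound claim. A sketch of a polling check, assuming the claim name `nfs-data` from the manifest; the `wait_for_pvc_bound` helper and its default of 30 attempts are illustrative choices, not part of the original setup:

```shell
# Hypothetical helper: poll a PVC until its phase is Bound.
# $1 = claim name, $2 = maximum attempts (default 30), one second apart.
wait_for_pvc_bound() {
  name=$1
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase is Pending until a volume has been provisioned and bound
    phase=$(kubectl get pvc "$name" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    if [ "$phase" = "Bound" ]; then
      echo "$name is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "$name not Bound after $attempts attempts (last phase: ${phase:-unknown})" >&2
  return 1
}

# Usage:
#   wait_for_pvc_bound nfs-data
```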

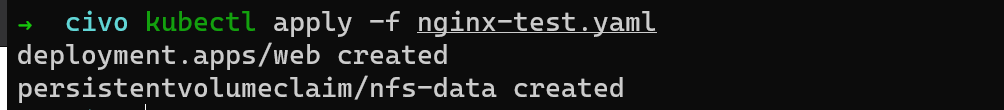

Run the deployment with the following command:

$ kubectl apply -f nginx-test.yaml

This will give the following output:

Next, check to see the web pod spinning up:

$ kubectl get pods

This will output the following:

Output

NAME                                  READY   STATUS    RESTARTS   AGE
nfs-server-nfs-server-provisioner-0   1/1     Running   0          23m
web-64965fc79f-b5v7w                  1/1     Running   0          4m

Now that the example deployment is up and running, you can scale it out to three instances using the kubectl scale command:

$ kubectl scale deployment web --replicas=3

This will give the output:

Output

deployment.extensions/web scaled

Now run the kubectl get command again:

$ kubectl get pods

You will find the scaled-up instances of the deployment:

Output

NAME                                  READY   STATUS    RESTARTS   AGE
nfs-server-nfs-server-provisioner-0   1/1     Running   0          24m
web-64965fc79f-q9626                  1/1     Running   0          5m
web-64965fc79f-qgd2w                  1/1     Running   0          17s
web-64965fc79f-wcjxv                  1/1     Running   0          17s

You now have three instances of your Nginx deployment connected to the same Persistent Volume. In the next step, you will make sure that they can share data with each other.
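As an optional check, the replica count can be confirmed without reading the pod list by eye. A sketch, assuming the `app=web` label from the manifest; `running_pods` is a hypothetical helper:

```shell
# Hypothetical helper: count pods with the given label that are in the Running phase.
# -o name prints one "pod/<name>" line per match, so the line count is the pod count.
running_pods() {
  kubectl get pods -l "$1" --field-selector=status.phase=Running -o name | wc -l | tr -d ' '
}

# Usage:
#   [ "$(running_pods app=web)" -eq 3 ] && echo "all three replicas are running"
```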

Step 3 —Validating NFS Data Sharing

For the final step, you will validate that the data is shared across all the instances that are mounted to the NFS share. To do this, you will create a file under the /data directory in one of the pods, then verify that the file exists in another pod’s /data directory.

To validate this, you will use the kubectl exec command. This command lets you specify a pod and perform a command inside that pod. To learn more about inspecting resources using kubectl, take a look at our kubectl Cheat Sheet.

To create a file named hello_world within one of your web pods, use kubectl exec to pass along the touch command. Note that the suffix after web in the pod name will be different for you, so replace the pod name below with one of your own pods from the output of kubectl get pods in the last step.

$ kubectl exec web-64965fc79f-q9626 -- touch /data/hello_world

Next, change the name of the pod and use the ls command to list the files in the /data directory of a different pod:

$ kubectl exec web-64965fc79f-qgd2w -- ls /data

Your output will show the file you created within the first pod:

Output

hello_world

This shows that all the pods share data using NFS and that your setup is working properly.
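The same check can be repeated across every replica rather than one pod at a time. A sketch, assuming the `app=web` label from the manifest and that the container image provides the `test` command; `check_shared_file` is a hypothetical helper:

```shell
# Hypothetical helper: check that every pod matching a label sees the same file.
# $1 = label selector, $2 = path inside the container.
check_shared_file() {
  status=0
  for pod in $(kubectl get pods -l "$1" -o name); do
    # kubectl exec passes through the exit code of the command it runs
    if kubectl exec "$pod" -- test -e "$2"; then
      echo "$pod: found $2"
    else
      echo "$pod: missing $2"
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_shared_file app=web /data/hello_world
```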

If you run into an issue while mounting the volume, make sure that the NFS client utilities (the nfs-common package on Debian and Ubuntu) are installed on your nodes.
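One quick way to check for the NFS client is to look for the `mount.nfs` helper that the client package installs. A sketch meant to be run on the node itself, not on your workstation; the package names in the message (`nfs-common` for Debian/Ubuntu, `nfs-utils` for RHEL-family systems) are an assumption about your node image, and `nfs_client_status` is a hypothetical helper:

```shell
# Hypothetical helper: report whether the mount.nfs helper is available on this host.
nfs_client_status() {
  if [ -x /sbin/mount.nfs ] || [ -x /usr/sbin/mount.nfs ] || command -v mount.nfs >/dev/null 2>&1; then
    echo "NFS client present"
  else
    echo "NFS client missing: install nfs-common (Debian/Ubuntu) or nfs-utils (RHEL family)"
    return 1
  fi
}

# Usage (on the node):
#   nfs_client_status
```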

Conclusion

In this tutorial, you created an NFS server backed by Civo block storage. The NFS server then used that block storage to provision and export NFS shares to workloads over an RWX-compatible protocol. In doing this, you were able to get around a technical limitation of Civo block storage and share the same PVC data across many pods. With this tutorial complete, your Civo Kubernetes cluster is now set up to accommodate a much wider set of deployment use cases.


August 27, 2022, 9:12 am